Brandon Lloyd and Dr. Parris K Egbert, Computer Science

In the 3D graphics lab at BYU we have developed a terrain visualization program called DVIEW capable of handling extremely large datasets. Our largest model is a piece of the Wasatch Front nearly 100 miles long and 50 miles wide. The original vision for DVIEW was to let users with fast internet connections navigate the model on their home PCs. We have developed methods in the lab that make it possible to render the model at interactive frame rates with commodity hardware. Unfortunately, only a fast network connection can handle the enormous amount of data that this application requires. This project seeks to alleviate the problem by compressing the data.

In DVIEW, surface details are provided by texture mapping the geometric model with aerial photography. The texture map is conceptually just one very large image. However, it is diced into a large number of square tiles to facilitate random access to different portions of the image. DVIEW uses an adaptive resolution scheme to keep the number of tiles for a particular view to a minimum, using only as much data as is needed. For instance, a single low-resolution tile can be used to cover large areas of distant geometry, instead of many high-resolution tiles with details too small to be seen. Because of this approach, only a fraction of the database is ever accessed during a typical viewing session. This approach comes at a price, however. The low-resolution tiles contain redundant information, since they are calculated from the tiles at the base resolution. This makes the tile database and the amount of data downloaded about 25% larger.
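The size of this redundancy can be estimated with a short calculation. The sketch below assumes that each coarser level halves the resolution in both dimensions, so that it holds one quarter as many tiles as the level beneath it; the text does not state the reduction factor, and the function names are hypothetical. Under that assumption the coarser levels approach one third of the base level's tile count, or about a quarter of the whole database.

```python
def pyramid_tile_counts(base_tiles_per_side):
    """Tile count at each level, from the base resolution up to a single tile.

    Assumes (for illustration only) that each coarser level halves the
    number of tiles along each side.
    """
    counts = []
    side = base_tiles_per_side
    while side >= 1:
        counts.append(side * side)
        side //= 2
    return counts


def redundant_share(base_tiles_per_side):
    """Fraction of all tiles that belong to the coarser, derived levels."""
    counts = pyramid_tile_counts(base_tiles_per_side)
    total = sum(counts)
    return (total - counts[0]) / total


# Example: a base level of 64 x 64 tiles.
counts = pyramid_tile_counts(64)
share = redundant_share(64)
```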

It takes between 1.5 s and 2.0 s to download a single 64K tile over a DSL connection, which is far too slow for DVIEW to be useful. My goal with this research was to reduce the tiles to 15-20% of their original size in order to improve download speed by a factor of 5 to 6. For DVIEW this is still not enough to achieve the optimal speed, but it is definitely usable.
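The relationship between tile size and download time can be worked through as follows. This is a rough sketch that assumes transfer time is proportional to tile size and ignores per-request latency, which the text does not discuss; the function names are hypothetical.

```python
def compressed_download_time(original_seconds, size_fraction):
    """Estimated download time when a tile shrinks to size_fraction of its size.

    Assumes time scales linearly with the number of bytes transferred.
    """
    return original_seconds * size_fraction


def speedup(size_fraction):
    """Speed improvement factor for a tile reduced to size_fraction of its size."""
    return 1.0 / size_fraction


# Slowest case from the text: a 2.0 s tile reduced to 20% of its size.
slow_case = compressed_download_time(2.0, 0.20)
# Fastest case from the text: a 1.5 s tile reduced to 15% of its size.
fast_case = compressed_download_time(1.5, 0.15)
```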

In my research, I found wavelet compression to be a perfect candidate for our application. The wavelet transform is inherently multiresolution: it can generate images at different resolutions without using redundant information. Moving to the wavelet transform alone would reduce the amount of data transferred by 25%. The wavelet transform is also spatially compact, which is to say that each coefficient generated by the transform affects only a few pixels. This means that we can continue to use our tiling approach if we are willing to duplicate in each tile a few rows of coefficients from its neighboring tiles. In practice this adds only a few percent to the size of the tile. Finally, wavelet compression provides a smooth continuum in the tradeoff between size and quality, which permits us to tune the compression to our specific needs.

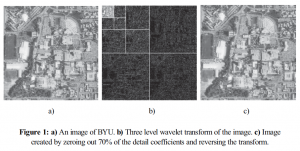

Wavelet compression works by first transforming an image into a two-dimensional matrix of coefficients. Each step of the transform splits the image into four pieces: an image at half the resolution of the original and three sets of detail coefficients that can be used to recover the full-resolution image. Repeating the transform a number of times on the half-sized image creates a pyramid of detail coefficients with a single low-resolution image at the top. Figure 1 shows an image and its three-level wavelet transform. Most of the detail coefficients, especially in the lower levels of the pyramid, are small. Setting these small coefficients to zero does not have much effect on the reconstructed image but leaves a very sparse matrix that can be compressed well with a number of methods.
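One step of such a transform, and the pyramid built by repeating it, can be sketched in a few lines. The example below uses the Haar wavelet because it is the simplest to write down; the text does not say which wavelet was used in this project, so the choice of Haar, the averaging normalization, and all function names are assumptions made for illustration.

```python
def haar_step(image):
    """One level of a 2D Haar transform on a square image with even side length.

    Returns (approx, dx, dy, dxy): a half-resolution image and three sets
    of detail coefficients, each half the size of the input.
    """
    half = len(image) // 2
    approx = [[0.0] * half for _ in range(half)]
    dx = [[0.0] * half for _ in range(half)]
    dy = [[0.0] * half for _ in range(half)]
    dxy = [[0.0] * half for _ in range(half)]
    for i in range(half):
        for j in range(half):
            # The 2x2 block of pixels that this output position summarizes.
            a, b = image[2 * i][2 * j], image[2 * i][2 * j + 1]
            c, d = image[2 * i + 1][2 * j], image[2 * i + 1][2 * j + 1]
            approx[i][j] = (a + b + c + d) / 4.0  # block average
            dx[i][j] = (a - b + c - d) / 4.0      # left minus right
            dy[i][j] = (a + b - c - d) / 4.0      # top minus bottom
            dxy[i][j] = (a - b - c + d) / 4.0     # diagonal difference
    return approx, dx, dy, dxy


def haar_inverse_step(approx, dx, dy, dxy):
    """Recover the full-resolution image from one level of coefficients."""
    half = len(approx)
    image = [[0.0] * (2 * half) for _ in range(2 * half)]
    for i in range(half):
        for j in range(half):
            s, h, v, g = approx[i][j], dx[i][j], dy[i][j], dxy[i][j]
            image[2 * i][2 * j] = s + h + v + g
            image[2 * i][2 * j + 1] = s - h + v - g
            image[2 * i + 1][2 * j] = s + h - v - g
            image[2 * i + 1][2 * j + 1] = s - h - v + g
    return image


def haar_pyramid(image, levels):
    """Repeat the transform on the half-sized image to build a pyramid.

    Returns the low-resolution image at the top of the pyramid and a list
    of detail-coefficient triples, finest level first.
    """
    details = []
    approx = image
    for _ in range(levels):
        approx, dx, dy, dxy = haar_step(approx)
        details.append((dx, dy, dxy))
    return approx, details


# Example: a 4x4 image transformed once, then rebuilt.
image = [[1, 3, 5, 7], [1, 3, 5, 7], [2, 2, 6, 6], [2, 2, 6, 6]]
approx, dx, dy, dxy = haar_step(image)
rebuilt = haar_inverse_step(approx, dx, dy, dxy)
top, details = haar_pyramid(image, 2)
```

In this example the smooth regions of the image produce detail coefficients of exactly zero, which is the property the compression step exploits.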

I chose to use a compression method called Wavelet Difference Reduction (J. Tian and R. O. Wells, Jr., “Embedded Image Coding Using Wavelet Difference Reduction”, Wavelet Image and Video Compression, P. Topiwala, editor, Kluwer Academic Publishers, 1998). This method makes no assumptions about the structure of the coefficients and thus can be used on coefficient tiles. The method also creates an embedded encoding, which means that cutting off the compressed data stream at any point still yields a usable image. Quality improves as more of the data stream is decoded.
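The embedded property can be illustrated with a much simpler scheme than Wavelet Difference Reduction. The sketch below is not WDR; it is a plain bit-plane ordering of non-negative integer coefficients, chosen only to show why a stream that sends the most significant information first can be cut off at any point. The function names and the sample values are hypothetical.

```python
def encode_bitplanes(coeffs, num_planes):
    """Split non-negative integer coefficients into bit planes.

    Planes are ordered from most significant to least significant, so the
    start of the stream carries the largest contributions.
    """
    planes = []
    for p in range(num_planes - 1, -1, -1):
        planes.append([(c >> p) & 1 for c in coeffs])
    return planes


def decode_bitplanes(planes, num_planes, count):
    """Rebuild coefficients from however many planes have arrived."""
    values = [0] * count
    for idx, plane in enumerate(planes):
        p = num_planes - 1 - idx  # bit position this plane represents
        for k, bit in enumerate(plane):
            values[k] |= bit << p
    return values


coeffs = [200, 13, 97, 0]
planes = encode_bitplanes(coeffs, 8)

# Decoding only the first half of the stream gives a coarse approximation.
partial = decode_bitplanes(planes[:4], 8, len(coeffs))
# Decoding the whole stream recovers the coefficients exactly.
full = decode_bitplanes(planes, 8, len(coeffs))
```

Each additional plane halves the worst-case error per coefficient, which mirrors the way quality improves as more of an embedded stream is received.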

I compressed our terrain database, zeroing out 60% of the coefficients. This yielded image tiles that were nearly indistinguishable from the originals but only one-quarter of the original size. Together with the elimination of redundancy, the database was compressed with an overall ratio of 6:1.
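The zeroing step can be sketched as a simple magnitude threshold. This is a minimal illustration that assumes the coefficients to discard are the smallest in absolute value, as described earlier; the function name and the sample coefficients are hypothetical and do not come from the terrain database.

```python
def zero_smallest(coeffs, fraction):
    """Return a copy of coeffs with the smallest-magnitude fraction zeroed."""
    count = round(len(coeffs) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]))
    result = list(coeffs)
    for k in order[:count]:
        result[k] = 0.0
    return result


# Example: zero out 60% of ten sample coefficients.
sample = [10.0, -0.5, 0.2, 7.0, -0.1, 3.0, 0.05, -4.0, 0.3, 0.0]
sparse = zero_smallest(sample, 0.6)
```

Only the four largest coefficients survive in this example, and the resulting runs of zeros are what a subsequent coder can represent compactly.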

To demonstrate the improved performance using the compressed database, I wrote a small program to navigate through the database. Performance improved most noticeably at the base level, where tiles were compressed the most. Because the encoding is embedded, a user can sacrifice quality for speed and download only a portion of each tile. If greater quality is needed in a particular area, the remaining portion of the compressed tile data may be downloaded and the image refined. I was very pleased with the results of this research and look forward to integrating the techniques into DVIEW.