G Thomas Finnigan and Dr. Thomas W Sederberg, Computer Science

Motivation

Image Compression is a way of decreasing the amount of space needed to store or transmit an image by removing redundant information. In many ways, compression it like folding clothing – the result takes up less space, but it not immediately usable. Compressing an image means that it is cheaper to store, and faster to transmit, but may take more time to compress and decompress. The best compression methods compress and decompress quickly, but offer a significant savings in the size of the file.

Background

Compression techniques can be divided into two categories: lossless and lossy. Lossless compression produces an exact replica of the original image. Lossy compression takes advantage of the way that humans perceive information, removing some details that you probably wouldn’t notice anyway in return for a smaller size than lossless compression can provide. Jpeg is an example of lossy compression – any noticeable differences between the original and the compressed image are called compression artifacts. With lossy compression, there is usually a quality threshold that you can select – as the quality goes down, the compression gets better and better, with less and less compression artifacts.

Previous Work

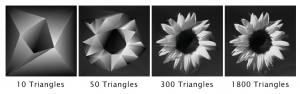

A graduate student at BYU, Dailin Ge, has developed an algorithm [1] that approximates an image using smoothly shaded triangles. The triangles have a color at each corner, and smoothy shade inbetween. The algorithm attempts to reduce the difference between the original image and the smooth-shaded approximation by moving the corners of the triangles, swapping edges of triangles, and adding new triangles. Using a triangular-mesh compression algorithm [2], we were able to show that this compression method could rival the quality of Jpeg compression for the same file size.

Obstacles and Resolutions

One of the problems with the optimization algorithm developed by Dailin Ge is that it takes a long time to get a good approximation. To overcome this problem, we tried several techniques – the first was to begin with a fairly dense mesh of triangles, move the corners of the triangles, and then remove triangles that didn’t help the quality of the final triangulation noticeably. This improved the speed considerably, but came at the price of not always coming up with the minimal approximation, which increases the final file size. The second solution to this problem was to precompute many of the values required, which works well.

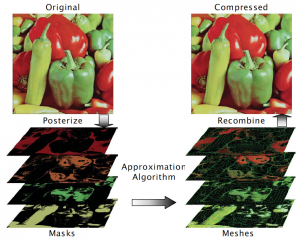

A second problem with the approximation algorithm is that it does not handle curves or detailed patterns well. The solution to this problem is to optionally allow the image to be split up into layers based on posterization, or color quantization, of the original image, and eventually recombined. This produces extraordinary results for images that are well suited to the technique, but worse results for images that are not well suited.

Conclusions

More work is needed to speed up the compression and improve the compression ratio, but this technique is very promising and yields good results so far.

______________________________________

References

- Dailin Ge. Automatic Triangle Placement in View Morphing. BYU Department of computer Science, Masters Thesis, June 2001.

- Jarek Rossignac. Edgebreaker: Connectivity compression for triangle meshes. IEEE Transactions on visualization and Computer Graphics, 5(1), 1999.