Fabian Fulda and Dr. Randall Lund, Germanic and Slavic Languages

Many people have difficulty learning foreign languages, especially when it comes to pronouncing words and phrases accurately.¹ A three-dimensional animated agent, in this case a computer animation that models the motions of the principal instruments of speech, can be an effective tool for teaching and learning the correct pronunciation of words and phrases.

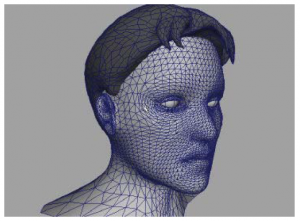

When I began this research, I was aware that some agents with a similar purpose already existed and that creating my ideal agent would take longer than a year and cost more than the funds available to me. Producing something new that would improve on the existing agents within the available time and budget was therefore challenging. The research conducted over the past year focused mainly on producing the graphical component of the agent, together with an interface capable of receiving high-resolution vector input for lifelike movement.

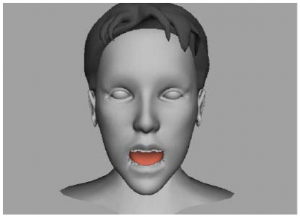

This document includes several screenshots of the project's results. Complete color and texture mapping for the skin, hair and eyes will be added in the final version. To ensure real-time processing, the program will let users switch color and texture mapping off while the agent renders data from an input device, allowing instant feedback.

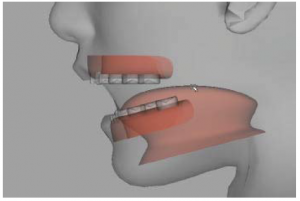

Vector input can be entered manually so that the agent can demonstrate the correct use of the instruments of speech. The agent can also receive input from another source, in which case it needs a converter to translate the incoming signal into the vector format its interface expects. To make this possible, I have worked with Dr. Fletcher, who has developed a pallet that measures specific movements of the mouth. The pallet resembles an orthodontic retainer and has 124 contact points, each of which detects the presence or absence of contact.
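The two input paths described above can be pictured as a small adapter layer: manual entry and any device converter both produce the same kind of vector, so the agent does not need to know where its input came from. The following is a minimal Python sketch under stated assumptions, not the project's actual interface; `ArticulatorVector` and its three normalized parameters, `VectorSource`, `ManualInput` and `drive_agent` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterator, Protocol


@dataclass(frozen=True)
class ArticulatorVector:
    """Hypothetical per-frame input for the agent; every value is normalized to [0, 1]."""
    jaw_opening: float
    lip_rounding: float
    tongue_tip_height: float

    def __post_init__(self) -> None:
        # Reject values outside the assumed normalized range.
        for name, value in vars(self).items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name}={value} is outside [0, 1]")


class VectorSource(Protocol):
    """Hypothetical interface: anything that can feed vectors to the agent."""
    def frames(self) -> Iterator[ArticulatorVector]: ...


class ManualInput:
    """Hand-entered vectors, e.g. to demonstrate a correct articulation."""
    def __init__(self, keyframes: list[ArticulatorVector]) -> None:
        self._keyframes = list(keyframes)

    def frames(self) -> Iterator[ArticulatorVector]:
        return iter(self._keyframes)


def drive_agent(source: VectorSource) -> list[ArticulatorVector]:
    """Stand-in for the agent's vector-expecting interface: collect frames to render."""
    return list(source.frames())
```

A converter for an external device would be one more class with a `frames()` method, which is the role the text assigns to the converter.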

We are currently working on a program that will convert the pallet's output signal to match the agent's input requirements. To my knowledge, this would be the first agent to work with an entirely digital speech/voice input signal.
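A converter of this kind might look roughly like the Python sketch below. It assumes, hypothetically, that one pallet sample arrives as a string of 124 `'0'`/`'1'` characters and that the contact points are numbered from the front of the palate to the back; the real signal format and sensor layout of Dr. Fletcher's pallet are not described here. The front/middle/back zoning and the contact-ratio output are likewise illustrative choices, not the project's actual mapping.

```python
PALLET_POINTS = 124  # number of contact points described in the text

# Hypothetical zoning: assumes points are indexed from the front of the palate to the back.
ZONES: dict[str, range] = {
    "front": range(0, 41),
    "middle": range(41, 82),
    "back": range(82, 124),
}


def parse_reading(raw: str) -> list[bool]:
    """Parse one sample in an assumed format: 124 characters of '0' (no contact) or '1' (contact)."""
    if len(raw) != PALLET_POINTS or set(raw) - {"0", "1"}:
        raise ValueError("expected exactly 124 characters of '0' or '1'")
    return [ch == "1" for ch in raw]


def contacts_to_vector(contacts: list[bool]) -> dict[str, float]:
    """Convert binary contacts into the fraction of touched points per zone, each in [0, 1]."""
    if len(contacts) != PALLET_POINTS:
        raise ValueError("expected 124 contact values")
    return {
        zone: sum(contacts[i] for i in indices) / len(indices)
        for zone, indices in ZONES.items()
    }
```

For example, a sample in which only the first 41 points register contact converts to `{"front": 1.0, "middle": 0.0, "back": 0.0}`.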

Some advantages of this agent are its speed, its adaptability and its open-endedness. Given a system with sufficient processing power, the agent can produce high-quality images in real time. It will be fairly easy to customize the agent for individual users, and the agent can be improved continually by adding detail and information without changing the program, the input device or the data converter. One disadvantage is that the agent runs on an expensive software module² that must be purchased. Another is that, if the pallet is used as the input device, it has to be custom-made at a cost of several hundred dollars.

One of the main problems the project had to adapt to was the unexpected lack of data on the movement and positioning of some instruments of speech. Essentially nothing had been published, and people working on the subject were hard to find, since the data are often difficult or even dangerous to obtain and there seems to be little market demand. This made it impossible to use a converter that would identify the appropriate phoneme and then retrieve the required movement vectors from a database. As an alternative, we are working on a converter that takes the movement information for the agent directly from the input device. Because Dr. Fletcher's pallet, the agent's current input device, only detects contact or non-contact, we will have to rely on some form of interpolation, with a corresponding loss of accuracy. This might be overcome in the future by adding a database of compensating information or by extending the input device.
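The text leaves the interpolation method open. As one possible, hypothetical choice, converted values could be interpolated linearly in time so that the agent renders smooth frames between pallet samples. The function name and the use of a single scalar track (for instance one zone's contact ratio) are assumptions made for this sketch. Interpolation of this kind only smooths the motion; it cannot recover positional information that the pallet does not measure, which is the inaccuracy the paragraph above refers to.

```python
from bisect import bisect_right


def interpolate(times: list[float], values: list[float], t: float) -> float:
    """Linearly interpolate one sampled parameter at time t, clamping outside the sampled range.

    Assumes `times` is sorted in ascending order.
    """
    if not times or len(times) != len(values):
        raise ValueError("times and values must be non-empty and of equal length")
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_right(times, t)  # guarantees times[i - 1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
```

With samples `times=[0.0, 0.5, 1.0]` and `values=[0.0, 1.0, 0.0]`, `interpolate(times, values, 0.75)` returns `0.5`, halfway between the second and third samples.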

¹ This also applies to deaf people with respect to their native language, which is like a foreign language to them.

² It would also be possible to write a dedicated software module to run the agent, which would become the cheaper option once the agent is used in greater numbers.