Morgan Quigley and Dr. Steven Ricks, School of Music

In this project, we sought to create a software system that uses the chaotic output of large cellular automata to create visualizations and sonifications that are aesthetically interesting. Conceptually, a cellular automaton is a group of deterministic cells that are interconnected to form a regular grid. The cells “evolve” in discrete time steps: at each step, the future value of every cell is calculated, and these values then replace the current values of all cells. Each automaton and its associated governing functions behave differently: some have a stable state, others have stable oscillations, and others experience chaotic motion at the local or global scale.

In our experiments, we used 2-D planar automata with discrete states. Informally, the update rule used in our implementation calculates the weighted average of the three-by-three submatrix of cells centered on the cell being updated, and then performs a table lookup in a transfer function to compute the new value. Because the transfer function guides the evolution of the automaton, it can be used to describe the characteristic behavior of an automaton and can be controlled by a user to guide the process.
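The update rule described above can be sketched as follows. This is a minimal Python illustration, not the C core of the software. The function name `step`, the wrap-around treatment of the edges, and the use of non-negative integer weights are assumptions made for the example.

```python
def step(grid, weights, transfer):
    """Advance a 2-D automaton of 8-bit states by one time step.

    grid     -- list of rows, each cell an integer in 0..255
    weights  -- 3x3 neighborhood matrix of non-negative integers (sum > 0)
    transfer -- 256-entry lookup table mapping an average to a new state
    """
    rows, cols = len(grid), len(grid[0])
    total_weight = sum(sum(row) for row in weights)
    # Future values go into a separate buffer so that every cell is
    # computed from the current state before anything is replaced.
    new_grid = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    # Assumption: the grid wraps around at its edges.
                    neighbor = grid[(r + dr) % rows][(c + dc) % cols]
                    acc += weights[dr + 1][dc + 1] * neighbor
            average = acc // total_weight  # integer weighted average, 0..255
            new_grid[r][c] = transfer[average]  # table lookup
    return new_grid
```

With an identity transfer table (`list(range(256))`) and uniform weights, this reduces to a simple blur. Reshaping the table is what changes the characteristic behavior.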

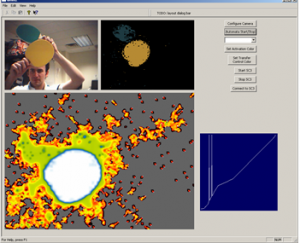

The trick to making automata useful in an artistic context is to create automata that are large enough to be visually and sonically interesting, yet small enough to be computed in real time. To this end, we chose to represent the discrete-state automata as a large matrix of 8-bit integers. The “core” of our software was carefully written in C to be as efficient as possible. The result is a system that can simulate planar automata with hundreds of rows and hundreds of columns at update rates of approximately 10 Hz. To make the automaton more visually interesting, we use a false-color lookup table to dramatically emphasize the various activation levels of the cells, as shown in the accompanying screenshot.
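A false-color lookup table of the kind mentioned above can be sketched in a few lines. The palette (black, blue, yellow, white) and the names `ANCHORS`, `make_false_color_table`, and `colorize` are illustrative assumptions. The colors actually used by the software are not specified here.

```python
# Hypothetical palette: (activation level, (red, green, blue)) anchor points.
ANCHORS = [
    (0, (0, 0, 0)),          # black
    (85, (0, 0, 255)),       # blue
    (170, (255, 255, 0)),    # yellow
    (255, (255, 255, 255)),  # white
]


def make_false_color_table(anchors=ANCHORS):
    """Build a 256-entry table mapping an 8-bit activation to an RGB tuple."""
    table = []
    for level in range(256):
        # Find the pair of anchors that brackets this level and
        # interpolate linearly between their colors.
        for (lo, c0), (hi, c1) in zip(anchors, anchors[1:]):
            if lo <= level <= hi:
                t = (level - lo) / (hi - lo)
                table.append(tuple(round(a + t * (b - a)) for a, b in zip(c0, c1)))
                break
    return table


def colorize(grid, table):
    """Replace every cell value with its color from the lookup table."""
    return [[table[value] for value in row] for row in grid]
```

Because the table has only 256 entries, it is built once and each frame costs one lookup per cell.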

Allowing users to interact with the automata is critical in producing an artistic result. We are of the opinion that incorporating humans “in the loop” of the creative process is what turns mechanized sound into music. Space considerations in this paper prohibit us from detailing all of the interaction schemes we created, but this list summarizes how users can interact with the automata:

• Local disturbances are created where a user clicks the visualization with a mouse

• A “trail” of local disturbances is created when the mouse is dragged

• Neighborhood matrix alterations are produced by deflecting a joystick, resulting in uneven propagation of state (i.e., activation patterns “slide around” on the automaton’s surface)

• The automata can be “wiped clean” with a mouse right-click

• Transfer function mutations are created by dragging the mouse on the transfer graph
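The mouse interactions in this list amount to simple writes into the state matrix. The sketch below shows one plausible way to do this. The function names, the circular shape of the disturbance, and the default radius and value are assumptions, not details of the actual software.

```python
def disturb(grid, row, col, radius=2, value=255):
    """Set all cells within `radius` of (row, col) to `value`, in place."""
    rows, cols = len(grid), len(grid[0])
    for r in range(max(0, row - radius), min(rows, row + radius + 1)):
        for c in range(max(0, col - radius), min(cols, col + radius + 1)):
            if (r - row) ** 2 + (c - col) ** 2 <= radius ** 2:
                grid[r][c] = value


def drag(grid, points, radius=2, value=255):
    """Leave a trail by disturbing every (row, col) point the mouse visited."""
    for row, col in points:
        disturb(grid, row, col, radius, value)


def wipe(grid):
    """Reset every cell to zero, in place."""
    for row in grid:
        for c in range(len(row)):
            row[c] = 0
```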

In addition to what can be done with the traditional computer input devices in the previous list, our software can perform real-time video processing to generate inputs. To capture information from the video stream, we used a common technique called color segmentation, in which one or more target colors are “pulled out” of an image. We found that many commonplace objects function as excellent color targets, including brightly colored scraps of paper, ping-pong paddles, and toys: any solid, saturated color source works.

Once the target colors have been defined for whatever objects are in use as markers, the color segmentation algorithm filters the incoming video in real time and feeds the result to the automaton. In our experiments, two target colors are used. One of them functions as the “activation” color: whenever this color is seen in the input image, a corresponding point disturbance is made in the automaton. Large blobs of color produce large blobs of high activation in the automaton, as shown in [figure].
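The activation path can be illustrated with a very small color-segmentation sketch. It assumes that frames arrive as rows of (red, green, blue) tuples already scaled to the size of the automaton, and that a pixel matches when every channel lies within a fixed tolerance of the target. The color space, the matching rule, and the names `segment` and `inject` are assumptions for the example.

```python
def segment(image, target, tolerance=40):
    """Return a binary mask: 1 where a pixel is close to the target color."""
    return [
        [
            1 if all(abs(pixel[i] - target[i]) <= tolerance for i in range(3)) else 0
            for pixel in row
        ]
        for row in image
    ]


def inject(grid, mask, value=255):
    """Raise every automaton cell under a matching pixel to `value`, in place."""
    for r, mask_row in enumerate(mask):
        for c, hit in enumerate(mask_row):
            if hit:
                grid[r][c] = value
```

Since each matching pixel becomes its own point disturbance, a large blob of the target color yields a large blob of high activation.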

The other target color in the input video stream is used to alter the transfer function. The center of mass of this color is used to “tweak” the transfer function. Each transfer function will respond differently to being pulled in various directions; in [figure], the lump in the transfer function causes the yellow-green “fingers” to grow out of the white disturbance.
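The center of mass of a segmented color is simply the mean position of its matching pixels. How that position reshapes the transfer function is not detailed here. The `add_bump` function below is therefore a purely hypothetical mapping, in which a triangular lump is added to the table at a chosen index.

```python
def center_of_mass(mask):
    """Return the mean (row, col) of the nonzero mask cells, or None if empty."""
    count = row_sum = col_sum = 0
    for r, mask_row in enumerate(mask):
        for c, hit in enumerate(mask_row):
            if hit:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return None  # target color not visible in this frame
    return (row_sum / count, col_sum / count)


def add_bump(transfer, center, height, width=16):
    """Hypothetical tweak: add a triangular lump to a 256-entry transfer table.

    The lump peaks at index `center` with the given `height` and falls to
    zero `width` entries away; results are clamped to the 8-bit range.
    """
    bumped = []
    for i, value in enumerate(transfer):
        falloff = max(0.0, 1.0 - abs(i - center) / width)
        bumped.append(min(255, max(0, round(value + height * falloff))))
    return bumped
```

One possible wiring, again an assumption, is to scale the horizontal coordinate of the center of mass to a table index and the vertical coordinate to the lump height.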

Mapping the activation patterns of a cellular automaton to an audible sound stream is a daunting and highly arbitrary task that can be performed in a myriad of ways. We have experimented with a variety of mapping schemes, including the following:

• Creating MIDI pitches and volumes by averaging rows and columns of the automata

• Averaging quadrants of the automata to produce pitches of four MIDI voices

• Using a global average to drive MIDI events

• Feeding various activation averages to SuperCollider, a software synthesizer

The strategies listed above produce very different types of sound. Because the output of the automata is simply a stream of integers, it can be mapped onto virtually any musical parameter, so many other schemes are possible.
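As one concrete illustration, the quadrant-averaging scheme can be sketched as follows. The linear mapping from average activation to pitch and the note range of 36 to 96 are assumptions made for the example. The code only computes MIDI note numbers and does not send them to a device.

```python
def quadrant_averages(grid):
    """Return the mean activation of the four quadrants of the grid.

    Order: top-left, top-right, bottom-left, bottom-right.
    Assumes the grid has at least two rows and two columns.
    """
    rows, cols = len(grid), len(grid[0])
    mid_r, mid_c = rows // 2, cols // 2
    blocks = [
        (0, mid_r, 0, mid_c),
        (0, mid_r, mid_c, cols),
        (mid_r, rows, 0, mid_c),
        (mid_r, rows, mid_c, cols),
    ]
    averages = []
    for r0, r1, c0, c1 in blocks:
        cells = [grid[r][c] for r in range(r0, r1) for c in range(c0, c1)]
        averages.append(sum(cells) / len(cells))
    return averages


def to_midi_pitch(average, low=36, high=96):
    """Map an average activation (0..255) linearly onto a MIDI note number."""
    return low + round(average / 255 * (high - low))


def quadrant_pitches(grid):
    """One MIDI note number per quadrant, i.e. one per voice."""
    return [to_midi_pitch(a) for a in quadrant_averages(grid)]
```

Quantizing the resulting pitches to a scale, or using the averages for volume instead, would be equally valid choices within the same framework.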

In summary, algorithmic composition using cellular automata has been shown to work in real time on standard personal computers. As demonstrated by our experiments, cellular automata can be used to create visual information, which can then be pushed through a variety of image processing and sound synthesis techniques to create streams of audio and video. The output of these systems is intriguing, especially when the process is guided by a human performer.

We intend to publish our experimental software on an algorithmic composition website we maintain: http://www.universalmusicmachine.com