Nathaniel Bean and Dr. Bruce Brown, Psychology

The opportunities that opened up as a result of receiving an ORCA grant have been significant. Because of the funding, I was able to work on three different projects simultaneously and complete all of them successfully. This was especially notable because, during the same period, I was taking a full course load and also applied for, and was accepted into, a respected doctoral program in psychology. Without this funding, I would not have been able to give these research projects the focus they needed. All three projects were submitted to the annual conference of the American Psychological Association but were not accepted. This was surprising, because the year before, the same conference had accepted a project on the same topic that was, in our view, less academically significant. To ensure that these projects were still presented, we applied to and participated in the annual Mary Lou Fulton Student Mentored Research Conference. Although we have not yet submitted write-ups of these projects for publication, we plan to do so this coming spring. The results of the projects were as follows.

Project 1

This project examined a sample of popular business-improvement surveys to determine how closely survey creators follow current research in order to provide the best possible information. Our analysis revealed a field that lacks any standards, despite the research that has been conducted. This anything-goes approach wastes a great deal of time, money, and effort, waste that thoughtful construction and application of these surveys could easily prevent.

Although this was in line with our original hypothesis, we honestly were not sure what we would find; so little research has been done on this question that we had no strong expectations. Future research is needed to determine whether different surveys are more effective in different settings.

Project 2

In this project, participants used a representative sample of the surveys collected in Project 1 to evaluate fictional characters, so that we could determine which types of survey best conveyed the desired information. We then compared these lab-derived results with results from a business setting reported in previous research (Otto et al., 2005) to see whether academic and business participants perceived the effectiveness of different types of surveys differently. Results indicated that academic participants were more negative about the surveys in question and saw differences in effectiveness among the surveys, while business participants did not. Finally, the two groups viewed word-based surveys very differently: academic participants strongly believed in their effectiveness, while business participants strongly believed in their ineffectiveness.

These findings were in line with our original hypothesis. Future research could use larger samples with a more diverse group of participants to determine whether these findings represent the business and academic communities at large.

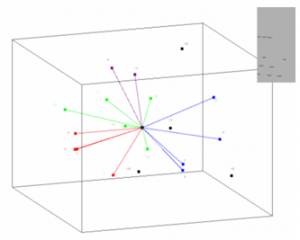

Project 3

This project used a statistical procedure called multidimensional scaling (MDS) to reduce the number of categories used in multi-rater surveys, since many of these categories overlap with one another. The 72 instruments collected in Project 1 were dissected to produce a list of 281 different categories. Several groups worked first independently and then together to reduce this list to 19 non-overlapping categories. These categories were then rated on how different they are from one another. MDS uses these difference ratings to determine where each category lies relative to the others: categories rated as very different end up far apart, and similar categories end up close together. For example, if you entered all the distances between cities in the United States, this procedure would produce a map of the United States.

The model produced from the category difference data showed several clusters of categories, but most importantly, the position of the leadership category led us to believe that the procedure was effective for its purpose. Leadership sat at the center of all the other categories, which fits how leadership itself is defined. Future research could assess whether these 19 categories are sufficient to convey all of the information desired from a multi-rater survey.
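To make the city-map analogy concrete, the following is a minimal sketch of classical (Torgerson) MDS in Python with NumPy. It is an illustration only: this report does not state which MDS variant or software was used (many psychology studies use non-metric MDS instead), and the function names `classical_mds`, `pairwise_distances`, and `most_central`, along with the made-up coordinates and letter labels, are hypothetical. The sketch starts from known points, computes their distance matrix, recovers a "map" from the distances alone, and then finds the point nearest the centre, analogous to how the leadership category was located.

```python
import numpy as np


def classical_mds(dist: np.ndarray, n_dims: int = 2) -> np.ndarray:
    """Classical (Torgerson) MDS: find coordinates whose pairwise Euclidean
    distances approximate the given dissimilarity matrix."""
    dist = np.asarray(dist, dtype=float)
    n = dist.shape[0]
    # Double-centre the squared dissimilarities to get a Gram (inner-product) matrix.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dist ** 2) @ centering
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(gram)
    order = np.argsort(eigvals)[::-1][:n_dims]
    # Clip tiny negative eigenvalues caused by rounding or non-Euclidean data.
    top_vals = np.clip(eigvals[order], 0.0, None)
    return eigvecs[:, order] * np.sqrt(top_vals)


def pairwise_distances(coords: np.ndarray) -> np.ndarray:
    """Euclidean distance between every pair of rows in coords."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def most_central(coords: np.ndarray, labels: list) -> str:
    """Label of the point closest to the centroid of the configuration."""
    centroid = coords.mean(axis=0)
    return labels[int(np.argmin(np.linalg.norm(coords - centroid, axis=1)))]


if __name__ == "__main__":
    # Hypothetical "map": four corners of a 3 x 4 rectangle plus its centre.
    true_coords = np.array(
        [[0.0, 0.0], [3.0, 0.0], [3.0, 4.0], [0.0, 4.0], [1.5, 2.0]]
    )
    dist = pairwise_distances(true_coords)
    recovered = classical_mds(dist, n_dims=2)
    print(np.allclose(pairwise_distances(recovered), dist))  # True
    print(most_central(recovered, ["A", "B", "C", "D", "E"]))  # E
```

The recovered coordinates match the originals only up to rotation, reflection, and translation, which is why the sketch compares distances rather than raw coordinates. With real similarity ratings, the distances are not exactly Euclidean, so the two-dimensional map is an approximation rather than an exact reconstruction.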