Ben Pence and Dr. Scott Thomson, Mechanical Engineering

During speech, the vocal folds vibrate, producing audible sound. In addition to traveling through the vocal tract, these vibrations are transmitted through several layers of tissue in the head and neck, resulting in small but measurable vibrations of the skin surface. Contact microphones sense these skin surface vibrations for speech transmission, whereas acoustic microphones sense the air vibrations that radiate from the mouth.

The purpose of this study was to determine experimentally how the frequency content of signals measured on the head and neck of a male subject compares to the signal received by an acoustic microphone. To test the frequency response of the skin during speech, small accelerometers (conduction microphones) were attached to 13 locations on the face and neck. These accelerometers measured the magnitude and frequency of the skin vibration at each location. From this information, it could be determined which frequencies or frequency ranges of speech are best transmitted at each location.
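As an illustration of what "frequency content" means here, the sketch below computes a single-sided magnitude spectrum from a sampled signal using NumPy. It is not the analysis procedure used in the study, which is not described. The 16 kHz sample rate, the Hann window, the function name `magnitude_spectrum`, and the synthetic two-tone test signal are all assumptions chosen for illustration.

```python
import numpy as np

def magnitude_spectrum(signal, sample_rate):
    """Return (frequencies in Hz, single-sided magnitude) for a real signal."""
    signal = np.asarray(signal, dtype=float)
    n = signal.size
    window = np.hanning(n)  # Hann window to reduce spectral leakage (assumed choice)
    spectrum = np.fft.rfft(signal * window)
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)
    # Scale by the window sum so a sinusoid's peak approximates its amplitude.
    magnitude = 2.0 * np.abs(spectrum) / window.sum()
    return freqs, magnitude

# Synthetic stand-in for an accelerometer recording (not measured data):
# a strong 120 Hz component plus a weaker 2400 Hz component.
sample_rate = 16000                       # Hz, assumed
t = np.arange(sample_rate) / sample_rate  # one second of samples
test_signal = 0.5 * np.sin(2 * np.pi * 120 * t) + 0.1 * np.sin(2 * np.pi * 2400 * t)

freqs, mag = magnitude_spectrum(test_signal, sample_rate)
peak_hz = freqs[np.argmax(mag)]  # frequency of the strongest component
```

Applying the same function to the signal from each accelerometer location and to the acoustic microphone signal would give spectra that can be compared location by location.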

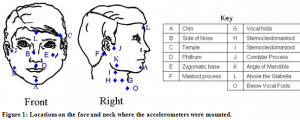

The anatomical locations at which the accelerometers were placed in this study are shown in Figure 1. Locations included the chin, the side of the nose, just above the upper lip (philtrum), the temple, the cheekbone (zygomatic base), behind the right ear (mastoid process), directly over the vocal folds, to the right of the vocal folds on the sternocleidomastoid, above and to the right of the vocal folds on the sternocleidomastoid, directly in front of the ear (condylar process), on the jawbone (angle of the mandible), and on the forehead (above the glabella).

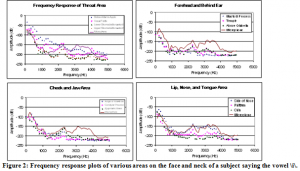

Frequency responses of the various locations are displayed in the accompanying graphs.

The above plots show the frequency responses of various locations on the face and neck of a male subject saying the vowel /i/, as in "feet". The skin vibration signals most like the microphone signal were those from the nose, the philtrum, the forehead, the temple, and the chin. The nose signal was most like the voiced signal; however, it had a greater amplitude when the mouth was closed than when it was open (as it is for the vowel /i/). Therefore, when phrases such as “The birch canoe slid on the smooth planks” were voiced, the nose signal alternated between high and low amplitudes, and although it was like the microphone signal in frequency, it was unlike the microphone signal in amplitude. The signal from the forehead had a lower amplitude than that from the nose but was similar to the microphone signal in frequency. Unlike the nose signal, the forehead signal kept a steady amplitude whether the mouth was closed or open. The same steady amplitude was observed at every skin location other than the nose.

The frequency content of the skin vibration at the philtrum was also like that of the microphone signal; however, some of the higher frequencies (2000 Hz and above) were slightly attenuated. The signal at the chin was like that at the philtrum but was more attenuated at the higher frequencies.
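One way to put a number on this kind of high-frequency attenuation is to compare the energy of a skin signal and the microphone signal within a frequency band. The sketch below is a minimal example under stated assumptions, not the method used in the study: the function names, the 16 kHz sample rate, the band edges, and the synthetic signals are all hypothetical. Because an accelerometer and an acoustic microphone measure different physical quantities, only the difference between bands (the spectral shape) is meaningful, not the absolute level.

```python
import numpy as np

def band_level_db(signal, sample_rate, f_lo, f_hi):
    """Total spectral power of `signal` in [f_lo, f_hi), in dB (arbitrary reference)."""
    signal = np.asarray(signal, dtype=float)
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / sample_rate)
    band = (freqs >= f_lo) & (freqs < f_hi)
    return 10.0 * np.log10(power[band].sum())

def relative_attenuation_db(skin, mic, sample_rate, f_lo, f_hi):
    """Positive values mean the skin signal is weaker than the mic signal in the band."""
    return (band_level_db(mic, sample_rate, f_lo, f_hi)
            - band_level_db(skin, sample_rate, f_lo, f_hi))

# Synthetic stand-ins (not measured data): both signals share a 200 Hz component,
# but the "skin" signal has its 3000 Hz component reduced to one tenth.
sample_rate = 16000                       # Hz, assumed
t = np.arange(sample_rate) / sample_rate  # one second of samples
mic = np.sin(2 * np.pi * 200 * t) + np.sin(2 * np.pi * 3000 * t)
skin = np.sin(2 * np.pi * 200 * t) + 0.1 * np.sin(2 * np.pi * 3000 * t)

low_band = relative_attenuation_db(skin, mic, sample_rate, 0, 2000)
high_band = relative_attenuation_db(skin, mic, sample_rate, 2000, 8000)
high_frequency_loss = high_band - low_band  # attenuation above 2000 Hz relative to below
```

In this synthetic case the tenfold amplitude reduction at 3000 Hz corresponds to about 20 dB of high-frequency loss, while the low band is unchanged.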

The frequency response plots from the locations on the throat were the least like the microphone signal. Unlike the signals at the forehead, philtrum, temple, and chin, the throat signals were not just attenuated at the higher frequencies but also contained frequencies that differed from those of the microphone signal.

In general, signals on the face had a frequency response more like that of the microphone signal than did signals from the throat area, but in both cases the higher frequencies were attenuated by the tissues of the head and neck. The amplitude of the signal tended to decrease as the distance from the throat and mouth increased.