John Akagi, Brady Moon, Jared Paxton, and Dr. Cammy Peterson, Electrical and Computer Engineering

Introduction

The use of small unmanned aircraft has grown in recent years, but training and constant attention are often required to control an aircraft in even the simplest of tasks. Our research seeks to reduce the complexity of controlling drones by sending them high-level commands through unique hand gestures captured by a glove fitted with an inertial measurement unit (IMU). This would allow aircraft operators to maintain focus on a primary task while easily supplementing decisions and actions with aircraft data. In contrast to low-level commands, such as directing drone velocities and control surfaces, high-level commands could instruct the aircraft to move to a specific location relative to the user or to execute a preset maneuver. This project is designed specifically for law enforcement and military personnel, to give them added situational awareness.

Methodology

For the controller, we used an Arduino Nano in combination with an MPU-9250, a 9-axis IMU that combines a compass, a gyroscope, and an accelerometer. The IMU communicated with the Arduino over an I2C connection, and data was received at approximately 100 Hz for all sensors and axes. The Arduino acted as an interface between the IMU and an offboard computer, which collected and processed all data.

For this research, we selected three gestures based on how intuitive each gesture was, how unique it was, and how diverse the resulting gesture set would be. The three gestures chosen were 1) a raised fist moving in a counterclockwise circle, 2) a palm-down counterclockwise circle, and 3) a repeated pointing gesture with the palm of the hand vertical, each of which could be mapped to an intuitive meaning. The individual gestures were unique, but some elements, such as circular motion and hand orientation, were shared between multiple gestures. This allowed us to test how difficult it would be to differentiate between gestures that share common elements.

While collecting training data, we gathered about 85 seconds of data at a rate of 100 Hz for each gesture. The data was generated equally by two people in an effort to reduce biases that might arise in the training data. The data was then split into segments using a rolling window so that each segment was offset by 0.01 s (one sample) from the previous one. Fifteen percent of the data was set aside for testing while the other eighty-five percent was used for training. Initial evaluation of the training data revealed that a one-vs-all logistic regression was more accurate than a three-layer neural net, so it was used for all further testing. The data was converted to the frequency domain using a Fast Fourier Transform (FFT) and used to train a logistic regression with data segments of various sizes.
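The segmentation and frequency-domain conversion described above can be sketched roughly as follows. This is a minimal illustration, not the code used in the study: the function names, the choice of magnitude spectra as features, and the way sensor channels are concatenated are all assumptions. A direct DFT stands in for the FFT so the sketch needs only the standard library; an FFT computes the same values faster.

```python
import cmath
import math

SAMPLE_RATE_HZ = 100  # sampling rate reported in the text
WINDOW_SAMPLES = 25   # 0.25 s at 100 Hz, one of the segment sizes tested


def rolling_windows(samples, size):
    """Return every length-`size` slice, each offset by one sample (0.01 s)."""
    return [samples[i:i + size] for i in range(len(samples) - size + 1)]


def dft_magnitudes(window):
    """Magnitude spectrum of a real-valued window for bins 0..N//2.

    Direct O(N^2) DFT used here as a stand-in for an FFT (assumption:
    magnitudes are the features; the source does not say).
    """
    n = len(window)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(window))
        mags.append(abs(acc))
    return mags


def features(channels, size=WINDOW_SAMPLES):
    """One feature vector per window: the spectra of all channels, concatenated.

    `channels` is a list of equal-length sample lists, one per sensor axis
    (hypothetical layout).
    """
    per_channel = [rolling_windows(ch, size) for ch in channels]
    return [
        [m for win in wins for m in dft_magnitudes(win)]
        for wins in zip(*per_channel)
    ]
```

With a 25-sample window, each channel contributes 13 frequency bins spaced 4 Hz apart, so a feature vector for the nine IMU axes would have 117 entries under these assumptions.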

Results

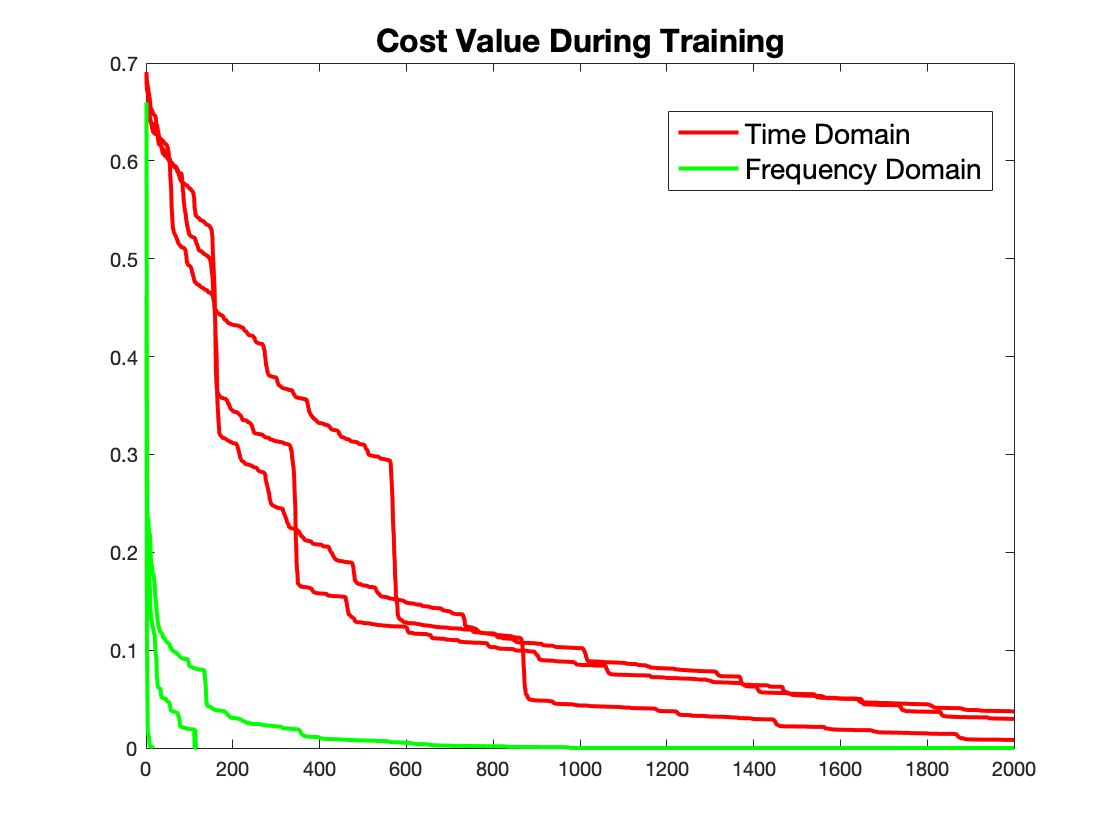

Table 1 shows the testing results of the various methods. Using a logistic regression on the FFT data yielded the highest gesture classification accuracy. Even with a limited time window of 0.25 s, it was able to classify the gestures with high accuracy. Figure 1 shows the cost at each time step while training the logistic regression on the three gestures in the time and frequency domains. It shows that the FFT data provides better features for distinguishing between gestures and that the cost function is minimized much faster.
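For readers unfamiliar with the technique, a one-vs-all logistic regression that records its cost at each training step might look like the sketch below. It assumes plain batch gradient descent on the cross-entropy cost; the learning rate, step count, and function names are illustrative and do not come from the study.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_binary(X, y, lr=0.1, steps=200):
    """Batch gradient descent for one binary classifier.

    Returns (weights, bias, costs), where costs[i] is the mean
    cross-entropy measured at the start of step i.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    costs = []
    for _ in range(steps):
        grad_w, grad_b, cost = [0.0] * d, 0.0, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            p = min(max(p, 1e-12), 1.0 - 1e-12)  # keep log() finite
            cost -= yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
            err = p - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
        costs.append(cost / n)
    return w, b, costs


def train_one_vs_all(X, labels, classes, **kwargs):
    """Train one binary classifier per gesture (that gesture vs. the rest)."""
    return {
        c: train_binary(X, [1 if lab == c else 0 for lab in labels], **kwargs)
        for c in classes
    }


def predict_proba(models, x):
    """Per-gesture confidence scores for a single feature vector."""
    return {
        c: sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        for c, (w, b, _costs) in models.items()
    }
```

Plotting the `costs` list of each classifier against the step index gives a curve of the kind shown in Figure 1; the predicted gesture is the class with the highest score from `predict_proba`.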

For implementation in hardware, we did not want the classifier to produce any false positives. To prevent them, we implemented a simple thresholding function that only allowed a classification when the confidence of the prediction was above a certain percentage. When the thresholding was applied to the FFT method, it rejected 11 of the 309 classifications. Four of these were incorrect classifications, and the others were correct but had a low level of confidence. Rejecting only these 11 classifications raised the accuracy of the remaining predicted gestures to 100%.
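A thresholding function of this kind can be very small. The sketch below is one possible form, assuming the classifier returns a confidence score per gesture; the 0.9 threshold is a placeholder, since the actual value is not stated here, and the function names are hypothetical.

```python
def classify_with_threshold(probabilities, threshold=0.9):
    """Return the most likely gesture, or None if its confidence is too low.

    `probabilities` maps gesture name -> confidence in [0, 1].
    The default threshold is a placeholder, not the value used in the study.
    """
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    return label if confidence >= threshold else None


def rejection_rate(rejected, total):
    """Fraction of classifications dropped by the threshold."""
    return rejected / total
```

For the figures reported above, `rejection_rate(11, 309)` is about 0.036, which corresponds to roughly 3.6% of classifications being dropped.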

Discussion

The accuracy of the training sets shows that different hand gestures can be differentiated with a high degree of accuracy (99.2%), even with a small data sample. A simple threshold improves the accuracy to near perfect, with only about 3.6% of classifications being dropped. Since each data segment spans 0.25 seconds and most gestures take about a second to execute, each gesture is evaluated multiple times while it is executed. This provides redundancy so that even if some segments are dropped, a gesture can still be identified correctly.
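To make the redundancy argument concrete, the sketch below counts how many 0.25 s segments fit inside a gesture and combines the per-segment results with a majority vote that skips rejected segments. Both the one-sample offset between live evaluations and the voting rule are assumptions made for illustration; the text does not specify how repeated evaluations are combined.

```python
from collections import Counter

SAMPLE_RATE_HZ = 100  # sampling rate reported in the text
WINDOW_S = 0.25       # segment length discussed above


def windows_in_gesture(gesture_s, window_s=WINDOW_S, rate_hz=SAMPLE_RATE_HZ):
    """Count full segments, each offset by one sample, that fit in a gesture."""
    gesture_n = round(gesture_s * rate_hz)
    window_n = round(window_s * rate_hz)
    return max(gesture_n - window_n + 1, 0)


def vote(window_labels):
    """Majority vote over accepted segments.

    Entries equal to None are segments rejected by the confidence
    threshold. Returns None if every segment was rejected.
    """
    accepted = [lab for lab in window_labels if lab is not None]
    if not accepted:
        return None
    return Counter(accepted).most_common(1)[0][0]
```

Under these assumptions a one-second gesture yields `windows_in_gesture(1.0) == 76` overlapping segments, so a handful of rejected segments leaves many others to identify the gesture.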

Having shown that different hand gestures can be identified with high accuracy, the next step in this project is to program drone behaviors that correspond to hand gestures. For example, a palm-down circle could indicate that the drone should fly directly back to the user. These behaviors will be developed and tested in simulation and then demonstrated on hardware.

Conclusion

We have shown that different hand gestures can be classified with high accuracy using a fast Fourier transform and one-vs-all logistic regression. Accuracy was increased by requiring the prediction confidence to exceed a threshold before a gesture was positively identified. Further efforts in this project will work to map drone behaviors to gestures.

Table 1 – This table shows the accuracy of the various methods and data set sizes.

Figure 1 – This graph shows the cost value at each time step in training the logistic regression. Three gestures are being classified using the time-domain and frequency-domain data. This shows that using data in the frequency domain provides a better feature set when classifying these gestures.