Parker Williams and Jeff Jenkins, Information Systems

Introduction

The percentage of individuals using mobile phones to respond to online applications, forms, and surveys is predicted to increase rapidly. As of June 2, 2015, there were an estimated 2.6 billion smartphones in use worldwide; that number is projected to reach 6.1 billion by 2020, when smartphones are expected to account for roughly 80% of all mobile-driven data traffic (Lunden, 2015). With the proliferation of smartphones, measuring the fidelity of information gathered from and about the user becomes extremely important. Smartphones are a rich source of behavioral and biometric information: they gather data about how a person is moving, where the person is, the person's biometrics, and how the person is answering questions.

We propose to use data gathered from smartphone sensors to detect indecision and fraud, specifically in online applications, forms, and surveys. This research should be especially useful to industries that rely on receiving information through web forms or surveys. Industries such as lending, insurance claims, and customer insight (e.g., Qualtrics, Survey Monkey, and SMG) can expect a rapidly growing number of individuals to use smartphones to fill out online applications, forms, and surveys.

Methodology

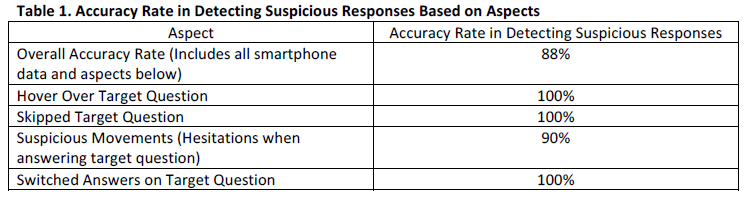

To detect indecision and fraud, we specified an algorithm that flags suspicious movements based on answering behavior and timing. For answering behavior, we looked at whether people switched answers, skipped and then revisited questions, hovered over other answers (only available on some devices that use polarization of charges in conductors to detect touches), or closed the screen. For timing, we looked at average tap time (the time between when someone starts pressing the screen and when they release it) and total response time for each question to detect hesitation. Based on pilot studies, we did not include accelerometer or gyroscope data in this algorithm because the statistics we calculated from them did not reliably differentiate between truth tellers and deceivers. In future research, we will explore the utility of accelerometer and gyroscope data and statistics in detecting suspicious responses.
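The behavioral and timing signals described above can be sketched in code. The following is a minimal illustration, not the algorithm used in the study: the `Tap` and `QuestionLog` structures, the field names, the rule that any single signal flags a response, and both thresholds (`max_avg_tap_ms`, `max_response_ms`) are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Tap:
    """One touch on the screen (hypothetical log format)."""
    answer: Optional[str]  # answer choice selected by this tap, or None
    down_ms: int           # time the finger started pressing the screen
    up_ms: int             # time the finger released the screen


@dataclass
class QuestionLog:
    """Everything recorded for one question (hypothetical log format)."""
    shown_ms: int                 # time the question was first displayed
    submitted_ms: int             # time the final answer was submitted
    taps: List[Tap] = field(default_factory=list)
    visits: int = 1               # > 1 means the question was skipped and revisited
    hovered_other: bool = False   # only reported by devices that detect hover
    screen_closed: bool = False   # the screen was closed while answering


def features(log: QuestionLog) -> Dict[str, float]:
    """Compute the answering-behavior and timing signals for one question."""
    selections = [t.answer for t in log.taps if t.answer is not None]
    # An answer switch is any selection that differs from the previous one.
    switches = sum(1 for a, b in zip(selections, selections[1:]) if a != b)
    tap_times = [t.up_ms - t.down_ms for t in log.taps]
    avg_tap = sum(tap_times) / len(tap_times) if tap_times else 0.0
    return {
        "answer_switches": switches,
        "revisited": log.visits > 1,
        "hovered_other": log.hovered_other,
        "screen_closed": log.screen_closed,
        "avg_tap_ms": avg_tap,
        "response_ms": log.submitted_ms - log.shown_ms,
    }


def is_suspicious(
    log: QuestionLog,
    max_avg_tap_ms: float = 300.0,      # assumed threshold, not from the study
    max_response_ms: float = 15000.0,   # assumed threshold, not from the study
) -> bool:
    """Flag a response if any behavior or timing signal fires."""
    f = features(log)
    return bool(
        f["answer_switches"] > 0
        or f["revisited"]
        or f["hovered_other"]
        or f["screen_closed"]
        or f["avg_tap_ms"] > max_avg_tap_ms
        or f["response_ms"] > max_response_ms
    )
```

In practice the thresholds would need to be calibrated on pilot data, and the signals could be weighted rather than combined with a simple "any" rule.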

We evaluated our algorithm by calculating its accuracy in detecting suspicious responses on smartphones. To determine which responses were suspicious, we had a human coder analyze the replay of every response. Using a set of criteria, the human coder judged whether the response showed hesitation or suspicious behaviors.
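The accuracy calculation can be illustrated with a short sketch, assuming that both the algorithm and the human coder produce one binary label (suspicious or not) per response. The function name and the example labels below are invented for illustration and are not data from the study.

```python
from typing import Sequence


def accuracy(predicted: Sequence[bool], coded: Sequence[bool]) -> float:
    """Share of responses where the algorithm agrees with the human coder."""
    if len(predicted) != len(coded):
        raise ValueError("both label lists must cover the same responses")
    if not predicted:
        raise ValueError("at least one response is required")
    matches = sum(p == c for p, c in zip(predicted, coded))
    return matches / len(predicted)


# Made-up labels for four responses (True = suspicious).
algorithm_flags = [True, False, True, False]
human_coder_flags = [True, False, False, False]
print(accuracy(algorithm_flags, human_coder_flags))  # 3 of 4 agree: 0.75
```

The same comparison can be run separately for each signal (answer switching, revisits, tap time, and so on) to obtain a per-signal accuracy.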

The data we evaluated came from an experiment we designed and ran in which participants were motivated to misrepresent answers on a survey. The experiment was divided into four parts: (1) experiment instructions, (2) a reasonable intelligence test, (3) demographic and integrity check questions, and (4) questions to evaluate participants' feelings about the reasonable intelligence test. The experiment was run via a Qualtrics survey.

Part one provided participants with an overview of the experiment, an explanation of compensation, and an integrity agreement. Participants were asked to agree to the following integrity agreement: “This means that you should not ask anyone for answers, that you not search for answers on the Web, or use any other source to determine your answers.” Alongside the survey, we constructed external websites that contained all the answers to the reasonable intelligence test.

Part two, the reasonable intelligence test, asked a total of 10 questions that were randomly chosen from a bank of 29 questions ranging in difficulty from easy to impossible. Participants answered these questions before moving on to part three.

Part three consisted of answering demographic questions and answering integrity check questions. We analyzed smartphone data from the integrity check questions to determine if participants fraudulently filled out the reasonable intelligence test by using outside assistance.

Part four asked five questions that evaluated participants' feelings about the reasonable intelligence test. Participants were awarded BYU SONA lab extra credit for participating. Participants were also entered into a raffle to receive one of four $20 Amazon.com gift cards.

Results

The following results were derived from the human coder’s analysis and smartphone data received from participants while answering questions. The results represent the accuracy of each aspect of detecting a suspicious response. See Table 1 for accuracy rates.

Discussion

Based on the results above, we conclude that suspicious responses on surveys and forms can be detected using smartphone data. These results provide the groundwork for us to continue expanding research into what can be learned from smartphone data.

Conclusion

In conclusion, we have shown that suspicious responses can be detected using a smartphone. Future research will delve further into data from the accelerometer and gyroscope sensors. This work will become increasingly valuable as the use of smartphones to answer online forms and surveys rises. We hope to continue making meaningful contributions to research on online forms and surveys and on sensor data.