James Carlson and Dr. Teppo Felin, Organizational Leadership and Strategy Department, Marriott School of Management

Crowdsourcing describes an emerging set of activities through which organizations leverage the power of the Internet to bring people together. It generally consists of placing some type of “open call” on the Internet, to which people around the globe with Internet access respond. Some collaborate and/or compete to create new ideas, services, or products on behalf of an existing company. Others refine existing work or data, for example through feedback, data entry, or proofreading.

This new phenomenon has the potential to change the way business is done, yet few of its possible applications have been tested. For example, little is known about how managerial decision-making might be improved through crowdsourcing. I drew one idea from an experience the statistician Francis Galton had in 1906.

While at the equivalent of what we would today call a “stock show,” Galton happened upon a competition and observed a startling phenomenon.1 Participants were submitting guesses about how much an ox would weigh, with a prize for the closest guess. Curious, Galton received permission to average the guesses of all 787 participants. Amazingly, the collective guess of the crowd, 1197 lbs., missed the true weight of the ox (1198 lbs.) by only a single pound!
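The arithmetic behind this observation is simple to sketch. The Python snippet below is a minimal illustration, not a reconstruction of Galton's procedure: the function names `crowd_estimate` and `percent_error` are hypothetical, and the only figures it uses are the two reported above (1197 lbs. and 1198 lbs.).

```python
from statistics import mean


def crowd_estimate(guesses):
    """Combine individual guesses into one collective guess (simple mean)."""
    if not guesses:
        raise ValueError("need at least one guess")
    return mean(guesses)


def percent_error(estimate, truth):
    """Absolute error of an estimate, as a percentage of the true value."""
    return abs(estimate - truth) / truth * 100


# Figures reported in the text: the crowd's average and the ox's true weight.
crowd_lbs, true_lbs = 1197, 1198
print(f"error: {abs(crowd_lbs - true_lbs)} lb "
      f"({percent_error(crowd_lbs, true_lbs):.2f}% of the true weight)")
```

On these figures the collective guess is off by less than a tenth of one percent. The intuition usually offered is that individual overestimates and underestimates tend to offset one another when averaged.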

Clearly, if the collective intelligence of a group of non-experts can result in such amazing accuracy, there are implications for management decision-making. Most of today’s managers are charged with directing an organization that is itself complex and that also operates in a complex, constantly changing environment. Responses and solutions to problems are constrained by limited resources of time, people, and/or money. Furthermore, businesses are increasingly being asked to “think outside themselves,” making management responsible for their organization’s connections to various other entities.2 As if dealing with present issues were not difficult enough, many managers must also make predictions about the future (e.g., What are my competitors doing? What are the coming economic trends or forces, and what do they mean for my business?).

Accurate information and estimates are therefore prized in management. Businesses want those who are most able to navigate the environment, and in the past that has meant a small group of experts (people with MBAs, experience, etc.). The rise of crowdsourcing raises an interesting question, however: could a large group of average people run a business just as effectively as a small group of experts? The goal of my project was to ascertain whether the advantages Galton observed in the ox weight competition could be applied to management decision-making through crowdsourcing.

To follow the theoretical underpinnings of crowdsourcing, my experiment differed from typical surveys and experiments. For example, I did not need a sample representative of a given population; I simply needed to place an “open call” on the Internet. In total, 153 people took the survey. Some friends and classmates participated out of goodwill. To generate a larger dataset, I placed the survey on Amazon’s Mechanical Turk crowdsourcing site, with a reward of $1 for participating. I also had two BYU business professors take the survey; they constituted the small group of experts necessary for the study.

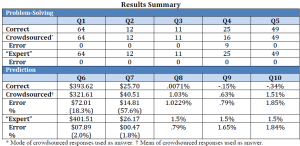

The survey consisted of problem-solving and prediction tasks. The problems to be solved came from a British MENSA (high-IQ society) exam, and I developed the prediction questions, which asked about stock prices and weekly changes in macro-level indices such as the DJIA and S&P 500. In the results summary below, the correct answer is displayed along with the aggregated “crowdsourced” answer and the averaged “expert” answer.
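One way such a comparison could be scored is sketched below. This is an assumption about method, not a description of the analysis actually performed: it takes the crowd's answer to be the mean of all crowd responses and the expert answer to be the mean of the professors' responses, and it judges each by its absolute distance from the correct answer. The function name and the sample numbers are hypothetical placeholders, not survey data.

```python
from statistics import mean


def compare_crowd_to_experts(correct, crowd_answers, expert_answers):
    """Score one numeric question: which averaged answer is closer to the truth?"""
    crowd = mean(crowd_answers)      # aggregated "crowdsourced" answer
    expert = mean(expert_answers)    # averaged "expert" answer
    crowd_error = abs(crowd - correct)
    expert_error = abs(expert - correct)
    if crowd_error < expert_error:
        closer = "crowd"
    elif expert_error < crowd_error:
        closer = "experts"
    else:
        closer = "tie"
    return {
        "crowd_error": crowd_error,
        "expert_error": expert_error,
        "closer": closer,
    }


# Hypothetical placeholder numbers, chosen only to exercise the function.
result = compare_crowd_to_experts(
    correct=100,
    crowd_answers=[90, 105, 120, 95],
    expert_answers=[98, 104],
)
print(result)
```

Repeating this for every question and counting how often each group is closer would give a simple overall tally. Other scoring rules, such as using the median instead of the mean, are equally plausible.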

In the end, my hypothesis that the crowd would far outperform the professors was not supported. Having gained some research experience, I can now see a few problems that I could not see at the outset of my project. For instance, a better survey design would have offered a single large prize for the most accurate answers rather than paying people simply for completing the survey. I think this could have significantly changed the results by providing a greater incentive for correct answers.

Obviously, some things cannot be crowdsourced (e.g., What is the best surgical procedure for this patient?). However, within the realm of business, managers would do well to figure out what they may be able to do more efficiently and effectively with the power of the crowd.

Author’s note: This project was presented at the National Conference on Undergraduate Research on March 30, 2012. I was recently accepted into a business management Ph.D. program, in large part because of the research experience I gained as an undergraduate. This project taught me invaluable lessons about conducting research.