Richard J. McMurtrey and Dr. Donovan Fleming and Scott Steffensen—Psychology and Neuroscience

The purpose of this research was to explore the mechanisms by which the brain distinguishes harmony from dissonance. The understanding of how sound is converted to neural messages began with Békésy, who discovered how sound is transduced in the cochlea. Later it was found that each auditory nerve fiber, which carries the messages for a specific frequency of sound, corresponds to a specific place in the primary auditory cortex (an arrangement referred to as “tonotopic”). In this experiment, the neural activity of the auditory cortex (T4) and of the sensory cortex (CZ) was recorded in response to specific auditory stimuli using electroencephalography.

Electroencephalography is the measurement of extracellular electrical activity of the cortex. It is useful because it is non-invasive, it records the first milliseconds of response, and it has become an accurate tool with improvements in amplification and filtering techniques. It is a measurement of phase-locking (synchronous neural activity) in the specific area of the recording electrode. The electrical signal is recorded by subtracting out a reference of random electrical activity (taken from the ear lobes) and then amplifying the result. Appropriate band-pass filters are then applied.
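The referencing and amplification step can be sketched in a few lines. This is a minimal illustration, not the recording system's actual processing: the function name `rereference`, the `gain` parameter, and the choice to average the two earlobe channels are all assumptions, and the band-pass filtering step is omitted.

```python
def rereference(channel, left_ear, right_ear, gain=1.0):
    """Subtract an earlobe reference from one EEG channel, then amplify.

    Assumption: the reference is the sample-by-sample mean of the two
    earlobe channels. The source only says the reference is taken from
    the ear lobes, so this is one plausible reading.
    """
    referenced = []
    for sample, left, right in zip(channel, left_ear, right_ear):
        reference = (left + right) / 2.0
        referenced.append(gain * (sample - reference))
    return referenced


# Illustrative placeholder samples, not recorded data.
print(rereference([5.0, 7.0], [1.0, 1.0], [3.0, 3.0], gain=2.0))
```

Activity that is common to the scalp electrode and the earlobes cancels in the subtraction, so what remains is dominated by activity local to the recording site.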

According to Helmholtz’s psychoacoustic theory of consonance and dissonance perception, the summation and negation of the wave pattern amplitude (i.e., phase interference) in a dissonant sound creates “beats,” or fluctuations in the amplitude of the total waveform, which are recognized by the brain. It has been suggested that the auditory cortex senses these beats and thus is the center for dissonance perception. The fact that dissonance has produced greater phase-locking in the auditory cortex in a couple of experiments supports this theory.
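The beat effect follows from a standard trigonometric identity. As a sketch for two equal-amplitude pure tones (an idealization; the symbols $f_1$, $f_2$, and $t$ are introduced here for illustration and do not describe the complex stimuli used in the experiment):

$$\sin(2\pi f_1 t) + \sin(2\pi f_2 t) = 2\cos\bigl(\pi (f_1 - f_2)\, t\bigr)\,\sin\bigl(\pi (f_1 + f_2)\, t\bigr)$$

The sine factor is a tone at the mean frequency $(f_1 + f_2)/2$, and the cosine factor is a slowly varying envelope. The magnitude of that envelope peaks $|f_1 - f_2|$ times per second, which is the beat rate.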

However, beats only account for dissonance perception at frequency separations of less than 50 Hz (a minor third interval at standard listening frequencies). At separations greater than 50 Hz, a different effect called combination tones appears, for much the same physical and physiological reasons as the beat effect. When two notes are played, one will also hear a tone at the sum of the two tones’ frequencies (sum tone = f1+f2) and a tone at their difference (difference tone = |f1-f2|). Certain timbres and waveforms can make this effect difficult to perceive, but it will invariably occur to some extent (the effect is much more audible with sine wave tones in the 1-2 kHz range). Thus it may be that the brain also judges harmony and dissonance by the relation of the sum and difference tones.
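The arithmetic above can be sketched as a small helper. The function name `describe_interval` and the constant `BEAT_LIMIT_HZ` are hypothetical names chosen for illustration; the 50 Hz boundary and the sum and difference formulas come from the text.

```python
BEAT_LIMIT_HZ = 50.0  # boundary between the two regimes, as stated in the text


def describe_interval(f1, f2):
    """Classify a two-tone stimulus and list its combination tones (in Hz)."""
    separation = abs(f1 - f2)
    regime = "beats" if separation < BEAT_LIMIT_HZ else "combination tones"
    return {
        "separation_hz": separation,
        "regime": regime,
        "sum_tone_hz": f1 + f2,            # sum tone = f1 + f2
        "difference_tone_hz": separation,  # difference tone = |f1 - f2|
    }


# Two illustrative pairs: one narrowly and one widely separated.
print(describe_interval(440.0, 460.0))
print(describe_interval(1000.0, 1500.0))
```

For the 1000 Hz and 1500 Hz pair, the helper reports a sum tone of 2500 Hz and a difference tone of 500 Hz, and places the pair in the combination-tone regime.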

Since individual nerve fibers connect at certain regions all along the cochlea, two tones sounding together may fire the same nerve, two nerves that are close together, or two nerves that are far apart, depending on the separation of the two frequencies. This is the basis of one aspect of my research project, which analyzes the differences between narrowly and widely separated tones. These different nerve fibers correspond to certain places on the auditory cortex. Therefore, if the brain discerns dissonance according to the beat effect, the EEGs theoretically should give different results for harmonious and dissonant stimuli depending on whether the tones are widely separated or not. If, however, the brain uses some other mechanism of harmony and dissonance perception, the frequency separation should be insignificant.

In order to control for random responses, subjects listened to two tracks of 160 two-toned sounds. One track designated harmony as the target, the other dissonance; each target track played the target stimulus 40% of the time and the non-target stimulus 60% of the time. This allowed target and non-target responses to be compared: significant results should be the same regardless of whether a stimulus was target or non-target. Each sound was classified according to its proper variables, and the data for each variable were averaged for each subject. Complex waveforms (synthesized piano and violin) were used for the sound stimuli, as opposed to pure tones, because musicians have been shown to have greater cortical responses to an instrument sound than to pure tones. There were 26 subjects who participated in the study (10 females and 16 males; 10 musicians and 16 non-musicians; of the musicians, six were females and four were males). Statistical significance was calculated using standard t-tests for p-values.
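The composition of one target track can be sketched as follows. This is an illustration under stated assumptions: the function name `build_track` is hypothetical, 160 is taken as the number of sounds in a single track, and the shuffled presentation order is assumed, since the text does not say how the stimuli were ordered.

```python
import random


def build_track(target, non_target, n_trials=160, target_fraction=0.40, seed=0):
    """Return a list of stimulus labels with the stated target proportion."""
    n_target = round(n_trials * target_fraction)  # 40% of 160 sounds
    track = [target] * n_target + [non_target] * (n_trials - n_target)
    # Assumption: presentation order is randomized; the seed keeps it repeatable.
    random.Random(seed).shuffle(track)
    return track


harmony_track = build_track("harmony", "dissonance")
print(harmony_track.count("harmony"), harmony_track.count("dissonance"))
```

Under these assumptions a harmony-target track contains 64 harmonious and 96 dissonant sounds, and the dissonance-target track reverses those counts, so each stimulus type is heard in both the target and the non-target role.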

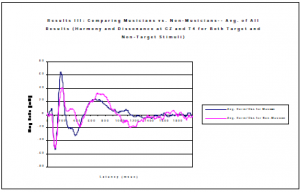

The results for this study are divided into four sections: I) Harmony vs. Dissonance, II) Widely vs. Narrowly Separated Frequencies, III) Musicians vs. Non-Musicians, and IV) Males vs. Females. In all results, the outcomes of each variable were about the same for both target and non-target stimuli, thus substantiating the significance of the results.

I) All waves are nearly identical, except for harmony, which has a slightly delayed P300 and a broad, high-amplitude P600-900 (mainly in T4) that is significantly different (p=.0077).

II) All peaks are nearly identical, except for a slightly shifted P300, and, again, an unusual peak in activity at about 700 ms. However, in this case, the difference for this peak is not significant, with a p-value of .1144.

III) These results are the most dramatic, with a much larger P200, but a much smaller P300 and P600-900, for musicians (p

IV) Males show longer latencies than females, as well as smaller P200, P300, and P600-900.
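Components such as the P300 and P600-900 are described by the amplitude and latency of a positive peak within a time window. A minimal sketch of that measurement is given below; the function name `peak_in_window`, the sampling rate, and the assumption that the averaged waveform begins at stimulus onset are all illustrative and are not taken from the study.

```python
def peak_in_window(waveform, sample_rate_hz, start_ms, end_ms):
    """Return (latency_ms, amplitude) of the largest sample in a time window.

    Assumption: waveform[0] corresponds to stimulus onset, and the
    component of interest is positive-going.
    """
    start = int(start_ms * sample_rate_hz / 1000)
    end = int(end_ms * sample_rate_hz / 1000)
    window = waveform[start:end + 1]
    offset = max(range(len(window)), key=lambda i: window[i])
    latency_ms = (start + offset) * 1000 / sample_rate_hz
    return latency_ms, window[offset]


# Placeholder waveform with two artificial peaks, not recorded data.
waveform = [0.0] * 1000
waveform[300] = 9.0
waveform[700] = 5.0
print(peak_in_window(waveform, 1000, 600, 900))
```

Restricting the search to a window keeps a larger peak elsewhere in the epoch (here the one at 300 ms) from being mistaken for the late component.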

In conclusion, the complex tones had the opposite effect of pure tones: harmony rather than dissonance induced a higher-amplitude P300. The P600-900 is unusual and may represent a conscious component of activity. The significant differences in auditory evoked potentials for harmony and dissonance, together with the insignificant results for widely and narrowly separated tones, suggest that some mechanism other than a simple sensing of dissonant beats is at work in distinguishing harmony and dissonance. It remains to be seen whether this mechanism involves a fundamental characteristic of harmonious frequencies, or whether it is a fundamental or learned characteristic of the brain. There were also significant differences in activity between musicians and non-musicians and between males and females.