Cannon, Rachel

Loh, Amanda

The Challenges and Solutions of Online Surveys

Faculty Mentor: Dan Dewey, Department of Linguistics and English Language

Surveys are a valuable tool in research. However, there is currently no single place where researchers can find compiled information about the challenges of online surveys and their corresponding solutions. Research publications typically address one specific issue, and these articles are published in a wide range of journals, which makes the findings difficult to locate and compare. Our findings, which we presented as a work in progress at the Georgetown University Round Table (GURT) 2016 conference, provide a single source from which researchers can easily learn about the challenges of online surveys and their solutions, helping them get the most out of their online survey research.

After reviewing the literature, we identified two general issues with online surveys: respondent satisficing and mobile device interface problems.

Satisficing is one of the biggest challenges of online surveys. Respondents satisfice when they answer survey questions only to meet the minimum requirements of a survey, without putting real thought into their answers. This produces superficial, less accurate answers and skews the data (Baker et al., 2010, p. 736). Examples of satisficing include response nondifferentiation (giving the same response to all questions) and item nonresponse (choosing neutral answers or skipping questions). At extreme levels of satisficing, respondents may give false information to qualify for surveys that offer compensation (Jones et al., 2014). Researchers have explored several methods that can significantly reduce satisficing, including Instructional Manipulation Checks (IMCs), trap questions, timing controls, and the elimination of no-opinion responses.
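The following minimal Python sketch makes the two satisficing patterns concrete. It flags a respondent's answers for response nondifferentiation and item nonresponse. It assumes a hypothetical encoding in which each answer is one of three things:

- an integer scale point,
- a no-opinion label such as `"DK"`,
- `None` for a skipped item.

The 30% nonresponse threshold is an illustrative assumption, not a value from the studies cited above.

```python
from typing import Collection, Sequence, Union

# Hypothetical encoding: int = scale point, str = no-opinion label such as "DK",
# None = skipped item.
Response = Union[int, str, None]


def is_straightliner(responses: Sequence[Response]) -> bool:
    """Response nondifferentiation: every answered scale item has the same value."""
    answered = [r for r in responses if isinstance(r, int)]
    return len(answered) >= 2 and len(set(answered)) == 1


def nonresponse_rate(
    responses: Sequence[Response], no_opinion: Collection[str] = ("DK",)
) -> float:
    """Share of items that were skipped or answered with a no-opinion label."""
    if not responses:
        return 0.0
    missing = sum(1 for r in responses if r is None or r in no_opinion)
    return missing / len(responses)


def flag_satisficer(
    responses: Sequence[Response],
    max_nonresponse: float = 0.3,  # illustrative threshold (assumption)
    no_opinion: Collection[str] = ("DK",),
) -> bool:
    """Flag a respondent for review if either satisficing pattern appears."""
    return (
        is_straightliner(responses)
        or nonresponse_rate(responses, no_opinion) > max_nonresponse
    )
```

Passing `no_opinion=("DK", "Neutral")` would also count neutral choices, which matches the broader definition of item nonresponse used here.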

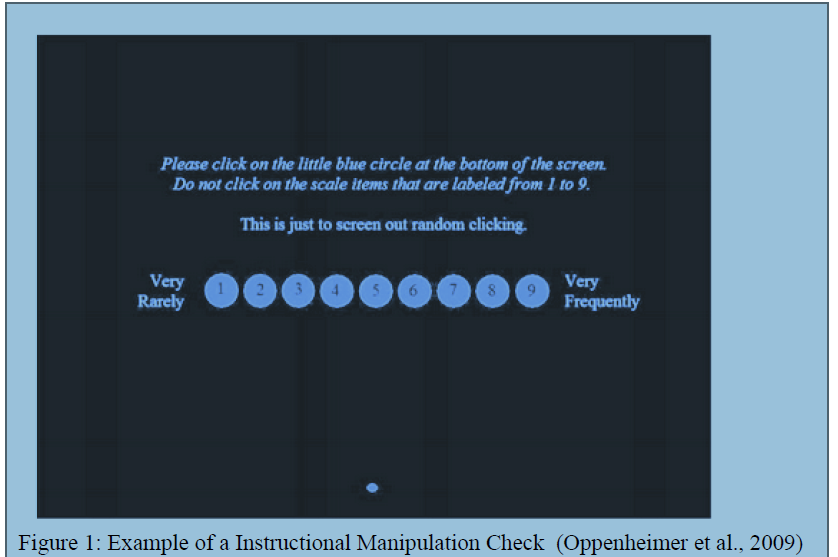

The Instructional Manipulation Check (IMC), developed by Oppenheimer et al. (2009), is a question that appears in the same format as the other questions but instructs the respondent to pick a specific answer or to click somewhere else on the screen (fig. 1). It is useful for identifying satisficers in surveys that rely on respondents reading instructions carefully. However, researchers should be aware that this method could filter out otherwise attentive respondents who were satisficing only in that moment, and that it could offend some respondents.
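An IMC is scored by comparing the respondent's answer with the answer the instructions asked for. The sketch below is a hypothetical Python illustration, not Oppenheimer et al.'s procedure. The item IDs, the `AttentionCheck` type, and the `max_failures` parameter are all assumptions. A single failure may reflect only momentary satisficing, so the sketch routes respondents to manual review instead of deleting them outright.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AttentionCheck:
    item_id: str   # hypothetical ID of the IMC item
    expected: str  # the answer the instructions tell respondents to give


def failed_checks(answers: Dict[str, str], checks: List[AttentionCheck]) -> List[str]:
    """IDs of checks where the instructed answer was not given (missing counts as failed)."""
    return [c.item_id for c in checks if answers.get(c.item_id) != c.expected]


def classify(
    answers: Dict[str, str], checks: List[AttentionCheck], max_failures: int = 0
) -> str:
    """'keep' if failures are within tolerance, otherwise 'review' (not automatic deletion)."""
    return "keep" if len(failed_checks(answers, checks)) <= max_failures else "review"
```

With several IMCs in one survey, setting `max_failures=1` tolerates a single lapse while still flagging repeated inattention.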

Trap questions (TQs), also known as red herrings or validating questions, instruct respondents to choose a specific option among the given responses. The two main types of TQs are directives and reverse-worded questions. A directive asks respondents to pick a specific answer to a survey question. A reverse-worded question presents what appears to be the same question twice, with the second instance actually worded as the opposite of the first. TQs can be used as a standard practice early in and throughout an online survey to alert respondents, reduce satisficing, and improve data quality. Less conspicuous TQs, such as reverse-worded questions, are not as effective at reducing satisficing as conspicuous TQs (directives). Although TQs are useful, respondents may find them offensive or disrespectful (Miller & Baker-Prewitt, 2009). It may help to give instructions before the survey that encourage respondents to take it seriously. This approach may lessen any distasteful shock a respondent might feel on encountering a red herring TQ, even though such instructions have been found not to reduce satisficing (Kapelner & Chandler, 2010).
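Directives can be scored like IMCs, by checking for the instructed answer. Reverse-worded pairs need a consistency check instead. On a scale from 1 to k, a response y to the reversed item corresponds to k + 1 - y on the original item. A respondent who strongly agrees with both "I enjoy my work" and "I dislike my work" (hypothetical items) is therefore inconsistent. This Python sketch assumes integer Likert codes and a hypothetical tolerance of one scale point.

```python
def reverse_score(value: int, scale_max: int, scale_min: int = 1) -> int:
    """Map a response on a reverse-worded item back onto the original item's direction."""
    return scale_max + scale_min - value


def reverse_pair_consistent(
    item: int, reversed_item: int, scale_max: int = 5, tolerance: int = 1
) -> bool:
    """True if the two answers agree once the reversed item is re-scored.

    The one-point tolerance is an illustrative assumption.
    """
    return abs(item - reverse_score(reversed_item, scale_max)) <= tolerance
```

On a 5-point scale, answering 5 to both items leaves a gap of 4 after re-scoring and fails the check. Answering 5 and 1 passes.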

Timing controls reduce satisficing by requiring respondents to spend a set amount of time on a question before they can move on. The idea is to slow respondents down so that they take time to cognitively process and answer each question. One specific timing control is Kapcha (Kapelner & Chandler, 2010), which fades a question in one letter or word at a time. This further encourages the respondent to process the question rather than simply wait for the time to run out. Both methods were found to improve data quality by 10% (Kapelner & Chandler, 2010). Kapcha may be more effective with certain populations and in surveys with monetary incentives, but it has received more negative than positive feedback. Researchers should therefore be aware of how respondents might react to these timing controls.
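The following Python sketch shows the logic of a timing control combined with a word-by-word fade-in in the spirit of Kapcha. It is not Kapelner and Chandler's implementation. The 0.25-second-per-word delay, the 3-second minimum, and the function names are assumptions chosen for illustration. The Next button unlocks only after the whole question has appeared and the minimum time has passed.

```python
from typing import List, Tuple


def reveal_schedule(
    question: str, seconds_per_word: float = 0.25
) -> List[Tuple[float, str]]:
    """(time offset in seconds, text visible so far) for a word-by-word fade-in."""
    words = question.split()
    return [(i * seconds_per_word, " ".join(words[: i + 1])) for i in range(len(words))]


def next_enabled_at(
    question: str, min_seconds: float = 3.0, seconds_per_word: float = 0.25
) -> float:
    """Seconds until Next unlocks: the later of the last word appearing and the minimum time."""
    last_word_at = max(len(question.split()) - 1, 0) * seconds_per_word
    return max(min_seconds, last_word_at)


def can_advance(
    elapsed: float, question: str, min_seconds: float = 3.0, seconds_per_word: float = 0.25
) -> bool:
    """True once the respondent has spent enough time on the question."""
    return elapsed >= next_enabled_at(question, min_seconds, seconds_per_word)
```

Tying the unlock time to the full reveal means that a longer question automatically gets a longer minimum viewing time.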

Eliminating no-opinion ("don't know," or DK) responses also decreases satisficing. DK responses such as “I don’t know” or “neutral” often allow respondents to satisfice easily. Respondents realize that deciding on their real opinion takes cognitive effort, so rather than making that effort, they choose the DK option. DeRouvray and Couper (2002) found that the most effective strategy for reducing missing data (skipped questions and DK responses) was to remove the DK option and to prompt respondents to select an answer before allowing them to skip a question. Despite the study's limitations, researchers assert that removing the DK option while providing a prompt and an option to skip questions does increase overall data quality.
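DeRouvray and Couper's (2002) most effective condition can be expressed as a small piece of survey logic: no DK option, plus a prompt before a skip is allowed. In this hypothetical Python sketch, the first attempt to move past an unanswered question triggers a prompt. A second attempt is allowed through and recorded as missing. The class name and the set of no-opinion labels are assumptions.

```python
from enum import Enum
from typing import List, Optional, Set

# Hypothetical labels treated as no-opinion options.
NO_OPINION_LABELS = {"I don't know", "Neutral", "No opinion"}


class Action(Enum):
    ACCEPT = "accept"  # an answer was given; record it and move on
    PROMPT = "prompt"  # no answer yet; ask the respondent to choose one
    SKIP = "skip"      # respondent declined after the prompt; record as missing


def remove_no_opinion(options: List[str]) -> List[str]:
    """Drop no-opinion options so respondents must take a position."""
    return [o for o in options if o not in NO_OPINION_LABELS]


class SkipPrompter:
    """Prompt once per unanswered question, then allow the skip."""

    def __init__(self) -> None:
        self._prompted: Set[str] = set()

    def on_next(self, question_id: str, answer: Optional[str]) -> Action:
        if answer is not None:
            return Action.ACCEPT
        if question_id not in self._prompted:
            self._prompted.add(question_id)
            return Action.PROMPT
        return Action.SKIP
```

Keeping the skip available after one prompt preserves respondents' ability to decline a question. The sketch only nudges them toward an answer instead of forcing one.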

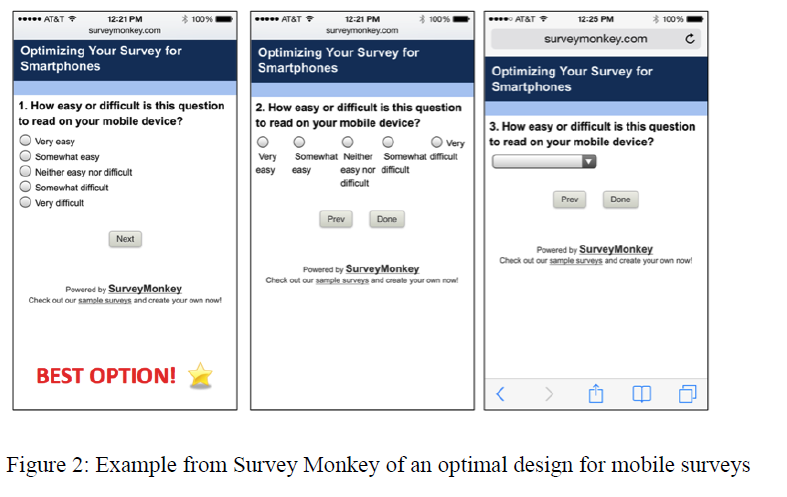

Aside from satisficing, researchers also face interface issues with mobile devices. With the rise of mobile technology, more surveys are being taken on smaller screens. However, many surveys are not built for these smaller screens and lack a readable, functional design. This causes higher break-off rates (respondents not finishing their surveys), slower responses, and reduced willingness to respond (Peterson et al., 2013). Some solutions are:

- creating a mobile-friendly version of the survey (fig. 2) with online survey creation tools;
- economizing on words (Nielsen, 2013);
- previewing and pilot testing mobile versions before making them available to respondents.

Couper (2013) suggests further solutions: avoiding grids, reducing the number of response options per question, using vertical scales instead of horizontal ones, limiting typed responses, and minimizing bandwidth requirements (e.g., avoiding videos).
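Two of Couper's (2013) recommendations lend themselves to an automated check before pilot testing: avoiding grids, and using vertical scales with fewer response options. The Python sketch below is hypothetical. The `Question` type, the five-option limit, and the idea of linting questions are assumptions, not features of any particular survey platform. The sketch splits a grid into one vertical question per row and warns about horizontal layouts or long option lists.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Question:
    text: str
    options: List[str]
    layout: str = "vertical"  # "vertical" or "horizontal" (hypothetical field)


def unroll_grid(stem: str, rows: List[str], columns: List[str]) -> List[Question]:
    """Replace a grid (rows = items, columns = shared scale) with one vertical question per row."""
    return [Question(f"{stem} {row}", list(columns), "vertical") for row in rows]


def mobile_warnings(q: Question, max_options: int = 5) -> List[str]:
    """List small-screen problems for one question (the option limit is an assumption)."""
    warnings = []
    if q.layout != "vertical":
        warnings.append("use a vertical scale")
    if len(q.options) > max_options:
        warnings.append(f"{len(q.options)} options; consider fewer")
    return warnings
```

Unrolling a grid lengthens the survey. Pilot testing the mobile version remains the way to check whether the trade-off lowers break-off rates for a given audience.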

In conclusion, it is impossible to control all the biases that can occur in online surveys. Administrators of online surveys should therefore analyze their data, look for potentially biased elements in their surveys, and be careful about the interpretations and generalizations they draw from the data. We have compiled research on strategies and tools for reducing the negative impact of the challenges faced in online surveys. However, this compilation is not comprehensive, and these solutions do not fully solve the challenges. Further research could develop tools that improve data quality even more.